Any Consequence That Decreases the Likelihood of a Behavior Occurring Again Is

Learning Objectives

By the end of this section, you will be able to:

- Define operant conditioning

- Explain the difference between reinforcement and punishment

- Distinguish between reinforcement schedules

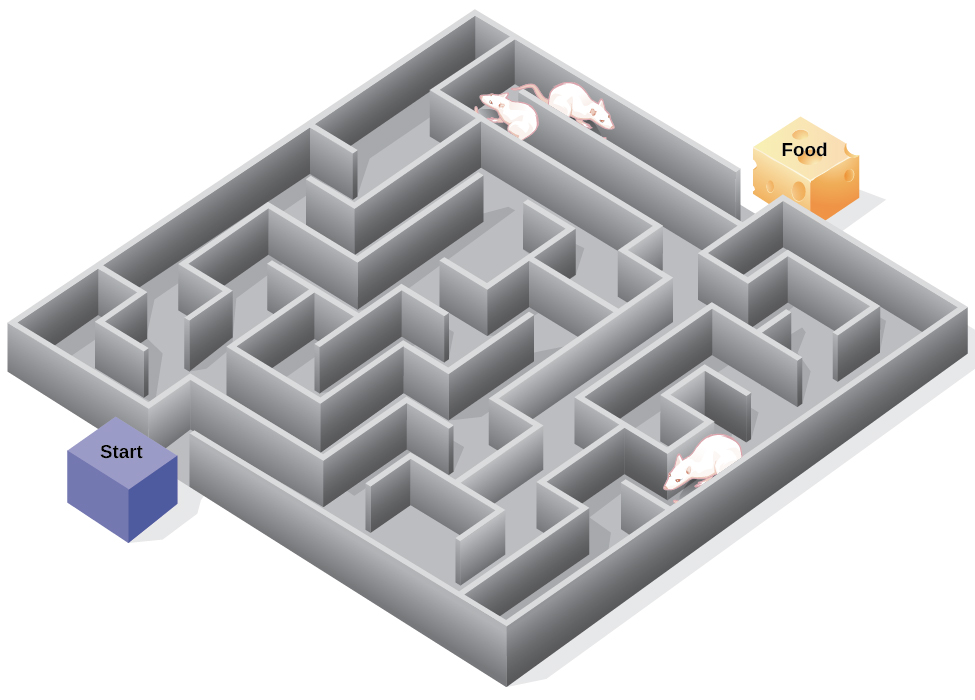

The previous section of this chapter focused on the type of associative learning known as classical conditioning. Remember that in classical conditioning, something in the environment triggers a reflex automatically, and researchers train the organism to react to a different stimulus. Now we turn to the second type of associative learning, operant conditioning. In operant conditioning, organisms learn to associate a behavior and its consequence ([link]). A pleasant consequence makes that behavior more likely to be repeated in the future. For example, Spirit, a dolphin at the National Aquarium in Baltimore, does a flip in the air when her trainer blows a whistle. The consequence is that she gets a fish.

| | Classical Conditioning | Operant Conditioning |
|---|---|---|
| Conditioning approach | An unconditioned stimulus (such as food) is paired with a neutral stimulus (such as a bell). The neutral stimulus eventually becomes the conditioned stimulus, which brings about the conditioned response (salivation). | The target behavior is followed by reinforcement or punishment to either strengthen or weaken it, so that the learner is more likely to exhibit the desired behavior in the future. |
| Stimulus timing | The stimulus occurs immediately before the response. | The stimulus (either reinforcement or punishment) occurs soon after the response. |

Psychologist B. F. Skinner saw that classical conditioning is limited to existing behaviors that are reflexively elicited, and it doesn't account for new behaviors such as riding a bike. He proposed a theory about how such behaviors come about. Skinner believed that behavior is motivated by the consequences we receive for the behavior: the reinforcements and punishments. His idea that learning is the consequence of consequences is based on the law of effect, which was first proposed by psychologist Edward Thorndike. According to the law of effect, behaviors that are followed by consequences that are satisfying to the organism are more likely to be repeated, and behaviors that are followed by unpleasant consequences are less likely to be repeated (Thorndike, 1911). Essentially, if an organism does something that brings about a desired result, the organism is more likely to do it again. If an organism does something that does not bring about a desired result, the organism is less likely to do it again. An example of the law of effect is in employment. One of the reasons (and often the main reason) we show up for work is because we get paid to do so. If we stop getting paid, we will likely stop showing up, even if we love our job.

Working with Thorndike's law of effect as his foundation, Skinner began conducting scientific experiments on animals (mainly rats and pigeons) to determine how organisms learn through operant conditioning (Skinner, 1938). He placed these animals inside an operant conditioning chamber, which has come to be known as a "Skinner box" ([link]). A Skinner box contains a lever (for rats) or disk (for pigeons) that the animal can press or peck for a food reward via the dispenser. Speakers and lights can be associated with certain behaviors. A recorder counts the number of responses made by the animal.

(a) B. F. Skinner developed operant conditioning for systematic study of how behaviors are strengthened or weakened according to their consequences. (b) In a Skinner box, a rat presses a lever in an operant conditioning chamber to receive a food reward. (credit a: modification of work by "Silly rabbit"/Wikimedia Commons)

Link to Learning

Watch this brief video clip to learn more about operant conditioning: Skinner is interviewed, and operant conditioning of pigeons is demonstrated.

In discussing operant conditioning, we use several everyday words (positive, negative, reinforcement, and punishment) in a specialized manner. In operant conditioning, positive and negative do not mean good and bad. Instead, positive means you are adding something, and negative means you are taking something away. Reinforcement means you are increasing a behavior, and punishment means you are decreasing a behavior. Reinforcement can be positive or negative, and punishment can also be positive or negative. All reinforcers (positive or negative) increase the likelihood of a behavioral response. All punishers (positive or negative) decrease the likelihood of a behavioral response. Now let's combine these four terms: positive reinforcement, negative reinforcement, positive punishment, and negative punishment ([link]).

| | Reinforcement | Punishment |
|---|---|---|
| Positive | Something is added to increase the likelihood of a behavior. | Something is added to decrease the likelihood of a behavior. |
| Negative | Something is removed to increase the likelihood of a behavior. | Something is removed to decrease the likelihood of a behavior. |
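The table reduces to two yes/no questions. Was a stimulus added or removed? Did the behavior become more or less likely?

As a study aid, here is a minimal Python sketch of that lookup. The function name `classify_consequence` is purely illustrative and not a standard term or library function.

```python
def classify_consequence(stimulus_added: bool, behavior_increases: bool) -> str:
    """Name an operant consequence from two yes/no questions.

    stimulus_added: True if something is added after the behavior
        ("positive"), False if something is taken away ("negative").
    behavior_increases: True if the behavior becomes more likely
        ("reinforcement"), False if it becomes less likely ("punishment").
    """
    sign = "positive" if stimulus_added else "negative"
    effect = "reinforcement" if behavior_increases else "punishment"
    return f"{sign} {effect}"


# Examples drawn from this section
print(classify_consequence(True, True))    # toy for cleaning: positive reinforcement
print(classify_consequence(False, True))   # seatbelt beep stops: negative reinforcement
print(classify_consequence(True, False))   # scolding for texting: positive punishment
print(classify_consequence(False, False))  # time-out from play: negative punishment
```

Keeping the two questions separate mirrors the point of the text: "positive/negative" and "reinforcement/punishment" answer different questions, so neither word alone tells you whether a behavior will increase.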

REINFORCEMENT

The most effective way to teach a person or animal a new behavior is with positive reinforcement. In positive reinforcement, a desirable stimulus is added to increase a behavior.

For example, you tell your five-year-old son, Jerome, that if he cleans his room, he will get a toy. Jerome quickly cleans his room because he wants a new art set. Let's pause for a moment. Some people might say, "Why should I reward my kid for doing what is expected?" But in fact we are constantly and consistently rewarded in our lives. Our paychecks are rewards, as are high grades and acceptance into our preferred school. Being praised for doing a good job and for passing a driver's test is also a reward. Positive reinforcement as a learning tool is extremely effective. It has been found that one of the most effective ways to increase achievement in school districts with below-average reading scores was to pay the children to read. Specifically, second-grade students in Dallas were paid $2 each time they read a book and passed a short quiz about the book. The result was a significant increase in reading comprehension (Fryer, 2010). What do you think about this program? If Skinner were alive today, he would probably think this was a great idea. He was a strong proponent of using operant conditioning principles to influence students' behavior at school. In fact, in addition to the Skinner box, he also invented what he called a teaching machine that was designed to reward small steps in learning (Skinner, 1961), an early forerunner of computer-assisted learning. His teaching machine tested students' knowledge as they worked through various school subjects. If students answered questions correctly, they received immediate positive reinforcement and could continue; if they answered incorrectly, they did not receive any reinforcement. The idea was that students would spend additional time studying the material to increase their chance of being reinforced the next time (Skinner, 1961).

In negative reinforcement, an undesirable stimulus is removed to increase a behavior. For example, car manufacturers use the principles of negative reinforcement in their seatbelt systems, which go "beep, beep, beep" until you fasten your seatbelt. The annoying sound stops when you exhibit the desired behavior, increasing the likelihood that you will buckle up in the future. Negative reinforcement is also used often in horse training. Riders apply pressure (by pulling the reins or squeezing their legs) and then remove the pressure when the horse performs the desired behavior, such as turning or speeding up. The pressure is the negative stimulus that the horse wants to remove.

PUNISHMENT

Many people confuse negative reinforcement with punishment in operant conditioning, but they are two very different mechanisms. Remember that reinforcement, even when it is negative, always increases a behavior. In contrast, punishment always decreases a behavior. In positive punishment, you add an undesirable stimulus to decrease a behavior. An example of positive punishment is scolding a student to get the student to stop texting in class. In this case, a stimulus (the reprimand) is added in order to decrease the behavior (texting in class). In negative punishment, you remove a pleasant stimulus to decrease a behavior. For example, when a child misbehaves, a parent can take away a favorite toy. In this case, a stimulus (the toy) is removed in order to decrease the behavior (misbehaving).

Punishment, especially when it is immediate, is one way to decrease undesirable behavior. For example, imagine your four-year-old son, Brandon, runs into the busy street to get his ball. You give him a time-out (negative punishment) and tell him never to go into the street again. Chances are he won't repeat this behavior. While strategies like time-outs are common today, in the past children were often subject to physical punishment, such as spanking. It's important to be aware of some of the drawbacks in using physical punishment on children. First, punishment may teach fear. Brandon may become fearful of the street, but he also may become fearful of the person who delivered the punishment: you, his parent. Similarly, children who are punished by teachers may come to fear the teacher and try to avoid school (Gershoff et al., 2010). Consequently, most schools in the United States have banned corporal punishment. Second, punishment may cause children to become more aggressive and prone to antisocial behavior and delinquency (Gershoff, 2002). They see their parents resort to spanking when they become angry and frustrated, so, in turn, they may act out this same behavior when they become angry and frustrated. For example, because you spank Brenda when you are angry with her for her misbehavior, she might start hitting her friends when they won't share their toys.

While positive punishment can be effective in some cases, Skinner suggested that the use of punishment should be weighed against the possible negative effects. Today's psychologists and parenting experts favor reinforcement over punishment; they recommend that you catch your child doing something good and reward her for it.

SHAPING

In his operant conditioning experiments, Skinner often used an approach called shaping. Instead of rewarding only the target behavior, in shaping, we reward successive approximations of a target behavior. Why is shaping needed? Remember that in order for reinforcement to work, the organism must first display the behavior. Shaping is needed because it is extremely unlikely that an organism will display anything but the simplest of behaviors spontaneously. In shaping, behaviors are broken down into many small, achievable steps. The specific steps used in the process are the following:

- Reinforce any response that resembles the desired behavior.

- Then reinforce the response that more closely resembles the desired behavior. You will no longer reinforce the previously reinforced response.

- Next, begin to reinforce the response that even more closely resembles the desired behavior.

- Continue to reinforce closer and closer approximations of the desired behavior.

- Finally, only reinforce the desired behavior.
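The shaping steps can be summarized as one rule: reinforce a response only if it meets the current criterion, then raise the criterion.

The Python sketch below is illustrative only and rests on two assumptions. First, each response is scored on a hypothetical integer "closeness" scale from 1 to 5, where 5 is the full target behavior. Second, the criterion rises after every single reinforcement, which is a simplification; in practice, how quickly to raise the criterion is a judgment call.

```python
def shape(responses, target_level=5):
    """Simulate shaping by successive approximations (illustrative only).

    Each response is a hypothetical integer 'closeness' level, where
    target_level is the full target behavior. A response is reinforced
    only if it meets the current criterion. After each reinforcement the
    criterion rises one level (up to target_level), so approximations
    that were reinforced earlier no longer are.
    Returns a list of (level, criterion, reinforced) tuples.
    """
    criterion = 1  # first step: reinforce anything resembling the behavior
    log = []
    for level in responses:
        reinforced = level >= criterion
        log.append((level, criterion, reinforced))
        if reinforced and criterion < target_level:
            criterion += 1  # demand a closer approximation next time
    return log


for level, criterion, reinforced in shape([1, 1, 2, 1, 3, 4, 3, 5, 5]):
    print(f"level {level}, criterion {criterion}: "
          f"{'reinforce' if reinforced else 'no reinforcement'}")
```

Notice that the second response (level 1) is not reinforced, even though the same response was reinforced a moment earlier. This matches the step "You will no longer reinforce the previously reinforced response."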

Shaping is often used in teaching a complex behavior or chain of behaviors. Skinner used shaping to teach pigeons not only such relatively simple behaviors as pecking a disk in a Skinner box, but also many unusual and entertaining behaviors, such as turning in circles, walking in figure eights, and even playing ping pong; the technique is commonly used by animal trainers today. An important part of shaping is stimulus discrimination. Recall Pavlov's dogs: he trained them to respond to the tone of a bell, and not to similar tones or sounds. This discrimination is also important in operant conditioning and in shaping behavior.

Link to Learning

Here is a brief video of Skinner's pigeons playing ping pong.

It's easy to see how shaping is effective in teaching behaviors to animals, but how does shaping work with humans? Let's consider parents whose goal is to have their child learn to clean his room. They use shaping to help him master steps toward the goal. Instead of performing the entire task, they set these steps and reinforce each step. First, he cleans up one toy. Second, he cleans up five toys. Third, he chooses whether to pick up ten toys or put his books and clothes away. Fourth, he cleans up everything except two toys. Finally, he cleans his entire room.

PRIMARY AND SECONDARY REINFORCERS

Rewards such as stickers, praise, money, toys, and more can be used to reinforce learning. Let's go back to Skinner's rats again. How did the rats learn to press the lever in the Skinner box? They were rewarded with food each time they pressed the lever. For animals, food would be an obvious reinforcer.

What would be a good reinforcer for humans? For your son Jerome, it was the promise of a toy if he cleaned his room. How about Joaquin, the soccer player? If you gave Joaquin a piece of candy every time he made a goal, you would be using a primary reinforcer. Primary reinforcers are reinforcers that have innate reinforcing qualities. These kinds of reinforcers are not learned. Water, food, sleep, shelter, sex, and touch, among others, are primary reinforcers. Pleasure is also a primary reinforcer. Organisms do not lose their drive for these things. For most people, jumping in a cool lake on a very hot day would be reinforcing, and the cool lake would be innately reinforcing: the water would cool the person off (a physical need), as well as provide pleasure.

A secondary reinforcer has no inherent value and only has reinforcing qualities when linked with a primary reinforcer. Praise, linked to affection, is one example of a secondary reinforcer, as when you called out "Great shot!" every time Joaquin made a goal. Another example, money, is only worth something when you can use it to buy other things: either things that satisfy basic needs (food, water, shelter, all primary reinforcers) or other secondary reinforcers. If you were on a remote island in the middle of the Pacific Ocean and you had stacks of money, the money would not be useful if you could not spend it. What about the stickers on the behavior chart? They also are secondary reinforcers.

Sometimes, instead of stickers on a sticker chart, a token is used. Tokens, which are also secondary reinforcers, can then be traded in for rewards and prizes. Entire behavior management systems, known as token economies, are built around the use of these kinds of token reinforcers. Token economies have been found to be very effective at modifying behavior in a variety of settings such as schools, prisons, and mental hospitals. For example, a study by Cangi and Daly (2013) found that use of a token economy increased appropriate social behaviors and reduced inappropriate behaviors in a group of autistic school children. Autistic children tend to exhibit disruptive behaviors such as pinching and hitting. When the children in the study exhibited appropriate behavior (not hitting or pinching), they received a "quiet hands" token. When they hit or pinched, they lost a token. The children could then exchange specified amounts of tokens for minutes of playtime.
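A token economy works like a ledger: tokens are earned, lost, and exchanged. The Python sketch below models the rules described for the Cangi and Daly (2013) study: earn a token for appropriate behavior, lose one for hitting or pinching, and trade tokens for playtime.

Two details are hypothetical assumptions, not details reported in the study: the exchange rate of two tokens per minute, and the rule that the balance never drops below zero. The class name `TokenLedger` is also illustrative.

```python
class TokenLedger:
    """Minimal token-economy ledger (hypothetical illustration).

    The default exchange rate and the no-negative-balance rule are
    assumptions for this sketch, not details from Cangi and Daly (2013).
    """

    def __init__(self, tokens_per_minute=2):
        self.tokens = 0
        self.tokens_per_minute = tokens_per_minute

    def record(self, appropriate):
        """Earn a token for appropriate behavior; lose one for hitting or pinching."""
        if appropriate:
            self.tokens += 1
        elif self.tokens > 0:  # assumption: the balance never goes negative
            self.tokens -= 1

    def exchange(self):
        """Trade tokens for whole minutes of playtime; leftover tokens are kept."""
        minutes = self.tokens // self.tokens_per_minute
        self.tokens -= minutes * self.tokens_per_minute
        return minutes


ledger = TokenLedger()
for appropriate in [True, True, False, True, True, True]:
    ledger.record(appropriate)
print(ledger.tokens)      # 4
print(ledger.exchange())  # 2 (minutes of playtime)
print(ledger.tokens)      # 0
```

Because the tokens only matter once they are exchanged, the sketch also shows why tokens are secondary reinforcers: their value comes entirely from what they can be traded for.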

Everyday Connection: Behavior Modification in Children

Parents and teachers often use behavior modification to change a child's behavior. Behavior modification uses the principles of operant conditioning to accomplish behavior change so that undesirable behaviors are switched for more socially acceptable ones. Some teachers and parents create a sticker chart, in which several behaviors are listed ([link]). Sticker charts are a form of token economy, as described in the text. Each time children perform the behavior, they get a sticker, and after a certain number of stickers, they get a prize, or reinforcer. The goal is to increase acceptable behaviors and decrease misbehavior. Remember, it is best to reinforce desired behaviors, rather than to use punishment. In the classroom, the teacher can reinforce a wide range of behaviors, from students raising their hands, to walking quietly in the hall, to turning in their homework. At home, parents might create a behavior chart that rewards children for things such as putting away toys, brushing their teeth, and helping with dinner. In order for behavior modification to be effective, the reinforcement needs to be connected with the behavior; the reinforcement must matter to the child and be done consistently.

Sticker charts are a form of positive reinforcement and a tool for behavior modification. Once this little girl earns a certain number of stickers for demonstrating a desired behavior, she will be rewarded with a trip to the ice cream parlor. (credit: Abigail Batchelder)

Time-out is another popular technique used in behavior modification with children. It operates on the principle of negative punishment. When a child demonstrates an undesirable behavior, she is removed from the desirable activity at hand ([link]). For example, say that Sophia and her brother Mario are playing with building blocks. Sophia throws some blocks at her brother, so you give her a warning that she will go to time-out if she does it again. A few minutes later, she throws more blocks at Mario. You remove Sophia from the room for a few minutes. When she comes back, she doesn't throw blocks.

There are several important points that you should know if you plan to implement time-out as a behavior modification technique. First, make sure the child is being removed from a desirable activity and placed in a less desirable location. If the activity is something undesirable for the child, this technique will backfire because it is more enjoyable for the child to be removed from the activity. Second, the length of the time-out is important. The general rule of thumb is one minute for each year of the child's age. Sophia is five; therefore, she sits in a time-out for five minutes. Setting a timer helps children know how long they have to sit in time-out. Finally, as a caregiver, keep several guidelines in mind over the course of a time-out: remain calm when directing your child to time-out; ignore your child during time-out (because caregiver attention may reinforce misbehavior); and give the child a hug or a kind word when time-out is over.

Time-out is a popular form of negative punishment used by caregivers. When a child misbehaves, he or she is removed from a desirable activity in an effort to decrease the unwanted behavior. For example, (a) a child might be playing on the playground with friends and push another child; (b) the child who misbehaved would then be removed from the activity for a short period of time. (credit a: modification of work by Simone Ramella; credit b: modification of work by "JefferyTurner"/Flickr)

REINFORCEMENT SCHEDULES

Remember, the best way to teach a person or animal a behavior is to use positive reinforcement. For example, Skinner used positive reinforcement to teach rats to press a lever in a Skinner box. At first, the rat might randomly hit the lever while exploring the box, and out would come a pellet of food. After eating the pellet, what do you think the hungry rat did next? It hit the lever again and received another pellet of food. Each time the rat hit the lever, a pellet of food came out. When an organism receives a reinforcer each time it displays a behavior, it is called continuous reinforcement. This reinforcement schedule is the quickest way to teach someone a behavior, and it is especially effective in training a new behavior. Let's look back at the dog that was learning to sit earlier in the chapter. Now, each time he sits, you give him a treat. Timing is important here: you will be most successful if you present the reinforcer immediately after he sits, so that he can make an association between the target behavior (sitting) and the consequence (getting a treat).

Link to Learning

Watch this video clip where veterinarian Dr. Sophia Yin shapes a dog's behavior using the steps outlined above.

Once a behavior is trained, researchers and trainers often turn to another type of reinforcement schedule: partial reinforcement. In partial reinforcement, also referred to as intermittent reinforcement, the person or animal does not get reinforced every time they perform the desired behavior. There are several different types of partial reinforcement schedules ([link]). These schedules are described as either fixed or variable, and as either interval or ratio. Fixed refers to the number of responses between reinforcements, or the amount of time between reinforcements, which is set and unchanging. Variable refers to the number of responses or amount of time between reinforcements, which varies or changes. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements.

| Reinforcement Schedule | Description | Result | Example |
|---|---|---|---|
| Fixed interval | Reinforcement is delivered at predictable time intervals (e.g., after 5, 10, 15, and 20 minutes). | Moderate response rate with significant pauses after reinforcement | Hospital patient uses patient-controlled, doctor-timed pain relief |
| Variable interval | Reinforcement is delivered at unpredictable time intervals (e.g., after 5, 7, 10, and 20 minutes). | Moderate yet steady response rate | Checking Facebook |
| Fixed ratio | Reinforcement is delivered after a predictable number of responses (e.g., after 2, 4, 6, and 8 responses). | High response rate with pauses after reinforcement | Piecework: factory worker getting paid for every set number of items manufactured |
| Variable ratio | Reinforcement is delivered after an unpredictable number of responses (e.g., after 1, 4, 5, and 9 responses). | High and steady response rate | Gambling |
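The four schedules in this table differ only in the rule that decides whether a given response earns a reinforcer. The following Python sketch expresses those four rules as small classes that share a `respond(t)` method, where `t` is the time of the response in minutes. Ratio schedules ignore `t`; interval schedules depend on it.

Several details are illustrative assumptions, not a standard implementation: the class names, the uniform distributions used for the variable schedules, and the parameter values.

```python
import random


class FixedRatio:
    """Reinforce every n-th response (n=2 reinforces responses 2, 4, 6, ...)."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after an unpredictable number of responses averaging `mean`."""

    def __init__(self, mean, rng):
        self.mean, self.rng = mean, rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumption: uniform on 1 .. 2*mean - 1, which averages `mean`.
        return self.rng.randint(1, 2 * self.mean - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """Reinforce the first response made once `interval` minutes have passed."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval
            return True
        return False  # responding early earns nothing


class VariableInterval:
    """Like FixedInterval, but each wait is unpredictable, averaging `mean`."""

    def __init__(self, mean, rng):
        self.mean, self.rng = mean, rng
        self.available_at = self._draw()

    def _draw(self):
        # Assumption: each wait is uniform on 0 .. 2*mean minutes.
        return self.rng.uniform(0, 2 * self.mean)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


fr = FixedRatio(2)
print([fr.respond(t) for t in range(8)])
# [False, True, False, True, False, True, False, True]

pump = FixedInterval(60)  # June's pump: at most one dose per 60 minutes
print([pump.respond(t) for t in (10, 59, 60, 61, 119, 125)])
# [False, False, True, False, False, True]
```

The sketch shows why ratio schedules reward fast responding while interval schedules do not. Under `FixedInterval`, a press at minute 59 earns nothing, just as June's early presses earn no medication. Under the variable schedules, the responder cannot predict which response will pay off.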

Now let's combine these four terms. A fixed interval reinforcement schedule is when behavior is rewarded after a set amount of time. For example, June undergoes major surgery in a hospital. During recovery, she is expected to experience pain and will require prescription medications for pain relief. June is given an IV drip with a patient-controlled painkiller. Her doctor sets a limit: one dose per hour. June pushes a button when pain becomes difficult, and she receives a dose of medication. Since the reward (pain relief) only occurs on a fixed interval, there is no point in exhibiting the behavior when it will not be rewarded.

With a variable interval reinforcement schedule, the person or animal gets the reinforcement based on varying amounts of time, which are unpredictable. Say that Manuel is the manager at a fast-food restaurant. Every once in a while someone from the quality control division comes to Manuel's restaurant. If the restaurant is clean and the service is fast, everyone on that shift earns a $20 bonus. Manuel never knows when the quality control person will show up, so he always tries to keep the restaurant clean and ensures that his employees provide prompt and courteous service. His productivity regarding prompt service and keeping a clean restaurant is steady because he wants his crew to earn the bonus.

With a fixed ratio reinforcement schedule, there is a set number of responses that must occur before the behavior is rewarded. Carla sells glasses at an eyeglass store, and she earns a commission every time she sells a pair of glasses. She always tries to sell people more pairs of glasses, including prescription sunglasses or a backup pair, so she can increase her commission. She does not care if the person really needs the prescription sunglasses; Carla just wants her bonus. The quality of what Carla sells does not matter because her commission is not based on quality; it's only based on the number of pairs sold. This distinction in the quality of performance can help determine which reinforcement method is most appropriate for a particular situation. Fixed ratios are better suited to optimize the quantity of output, whereas a fixed interval, in which the reward is not quantity based, can lead to a higher quality of output.

In a variable ratio reinforcement schedule, the number of responses needed for a reward varies. This is the most powerful partial reinforcement schedule. An example of the variable ratio reinforcement schedule is gambling. Imagine that Sarah, generally a smart, thrifty woman, visits Las Vegas for the first time. She is not a gambler, but out of curiosity she puts a quarter into the slot machine, and then another, and another. Nothing happens. Two dollars in quarters later, her curiosity is fading, and she is just about to quit. But then, the machine lights up, bells go off, and Sarah gets 50 quarters back. That's more like it! Sarah gets back to inserting quarters with renewed interest, and a few minutes later she has used up all her gains and is $10 in the hole. Now might be a sensible time to quit. And yet, she keeps putting money into the slot machine because she never knows when the next reinforcement is coming. She keeps thinking that with the next quarter she could win $50, or $100, or even more. Because most types of gambling follow a variable ratio schedule, people keep trying and hoping that the next time they will win big. This is one of the reasons that gambling is so addictive, and so resistant to extinction.

In operant conditioning, extinction of a reinforced behavior occurs at some point after reinforcement stops, and the speed at which this happens depends on the reinforcement schedule. In a variable ratio schedule, the point of extinction comes very slowly, as described above. But in the other reinforcement schedules, extinction may come quickly. For example, if June presses the button for the pain relief medication before the allotted time her doctor has approved, no medication is administered. She is on a fixed interval reinforcement schedule (dosed hourly), so extinction occurs quickly when reinforcement doesn't come at the expected time. Among the reinforcement schedules, variable ratio is the most productive and the most resistant to extinction. Fixed interval is the least productive and the easiest to extinguish ([link]).

The four reinforcement schedules yield different response patterns. The variable ratio schedule is unpredictable and yields high and steady response rates, with little if any pause after reinforcement (e.g., gambler). A fixed ratio schedule is predictable and produces a high response rate, with a short pause after reinforcement (e.g., eyeglass saleswoman). The variable interval schedule is unpredictable and produces a moderate, steady response rate (e.g., restaurant manager). The fixed interval schedule yields a scallop-shaped response pattern, reflecting a significant pause after reinforcement (e.g., surgery patient).

Connect the Concepts: Gambling and the Brain

Skinner (1953) stated, "If the gambling establishment cannot persuade a patron to turn over money with no return, it may achieve the same effect by returning part of the patron's money on a variable-ratio schedule" (p. 397).

Skinner uses gambling as an example of the power and effectiveness of conditioning behavior based on a variable ratio reinforcement schedule. In fact, Skinner was so confident in his knowledge of gambling addiction that he even claimed he could turn a pigeon into a pathological gambler ("Skinner's Utopia," 1971). Beyond the power of variable ratio reinforcement, gambling seems to work on the brain in the same way as some addictive drugs. The Illinois Institute for Addiction Recovery (n.d.) reports evidence suggesting that pathological gambling is an addiction similar to a chemical addiction ([link]). Specifically, gambling may activate the reward centers of the brain, much like cocaine does. Research has shown that some pathological gamblers have lower levels of the neurotransmitter (brain chemical) known as norepinephrine than do normal gamblers (Roy et al., 1988). According to a study conducted by Alec Roy and colleagues, norepinephrine is secreted when a person feels stress, arousal, or thrill; pathological gamblers use gambling to increase their levels of this neurotransmitter. Another researcher, neuroscientist Hans Breiter, has done extensive research on gambling and its effects on the brain. Breiter (as cited in Franzen, 2001) reports that "Monetary reward in a gambling-like experiment produces brain activation very similar to that observed in a cocaine addict receiving an infusion of cocaine" (para. 1). Deficiencies in serotonin (another neurotransmitter) might also contribute to compulsive behavior, including a gambling addiction.

It may be that pathological gamblers' brains are different from those of other people, and perhaps this difference may somehow have led to their gambling addiction, as these studies seem to suggest. However, it is very difficult to ascertain the cause because it is impossible to conduct a true experiment (it would be unethical to try to turn randomly assigned participants into problem gamblers). Therefore, it may be that causation actually moves in the opposite direction: perhaps the act of gambling somehow changes neurotransmitter levels in some gamblers' brains. It also is possible that some overlooked factor, or confounding variable, played a role in both the gambling addiction and the differences in brain chemistry.

Some research suggests that pathological gamblers use gambling to compensate for abnormally low levels of the hormone norepinephrine, which is associated with stress and is secreted in moments of arousal and thrill. (credit: Ted Murphy)

COGNITION AND LATENT LEARNING

Although strict behaviorists such as Skinner and Watson refused to believe that cognition (such as thoughts and expectations) plays a role in learning, another behaviorist, Edward C. Tolman, had a different opinion. Tolman's experiments with rats demonstrated that organisms can learn even if they do not receive immediate reinforcement (Tolman & Honzik, 1930; Tolman, Ritchie, & Kalish, 1946). This finding was in conflict with the prevailing idea at the time that reinforcement must be immediate in order for learning to occur, thus suggesting a cognitive aspect to learning.

In the experiments, Tolman placed hungry rats in a maze with no reward for finding their way through it. He also studied a comparison group that was rewarded with food at the end of the maze. As the unreinforced rats explored the maze, they developed a cognitive map: a mental picture of the layout of the maze ([link]). After 10 sessions in the maze without reinforcement, food was placed in a goal box at the end of the maze. As soon as the rats became aware of the food, they were able to find their way through the maze quickly, just as quickly as the comparison group, which had been rewarded with food all along. This is known as latent learning: learning that occurs but is not observable in behavior until there is a reason to demonstrate it.

Psychologist Edward Tolman found that rats use cognitive maps to navigate through a maze. Have you ever worked your way through various levels of a video game? You learned when to turn left or right, move up or down. In that case you were relying on a cognitive map, just like the rats in a maze. (credit: modification of work by "FutUndBeidl"/Flickr)

Latent learning also occurs in humans. Children may learn by watching the actions of their parents but only demonstrate it at a later date, when the learned material is needed. For example, suppose that Ravi's dad drives him to school every day. In this way, Ravi learns the route from his house to his school, but he's never driven there himself, so he has not had a chance to demonstrate that he's learned the way. One morning Ravi's dad has to leave early for a meeting, so he can't drive Ravi to school. Instead, Ravi follows the same route on his bike that his dad would have taken in the car. This demonstrates latent learning. Ravi had learned the route to school, but had no need to demonstrate this knowledge earlier.

Everyday Connection: This Place Is Like a Maze

Accept you ever gotten lost in a building and couldn't detect your way back out? While that tin can exist frustrating, yous're not alone. At one time or another we've all gotten lost in places like a museum, hospital, or university library. Whenever we go someplace new, nosotros build a mental representation—or cognitive map—of the location, every bit Tolman'due south rats built a cognitive map of their maze. Nonetheless, some buildings are confusing because they include many areas that look alike or take short lines of sight. Because of this, it's frequently difficult to predict what'southward effectually a corner or decide whether to turn left or right to go out of a building. Psychologist Laura Carlson (2010) suggests that what we identify in our cognitive map tin impact our success in navigating through the environs. She suggests that paying attention to specific features upon entering a building, such every bit a picture on the wall, a fountain, a statue, or an escalator, adds information to our cognitive map that can be used after to assist find our way out of the edifice.

Link to Learning

Watch this video to learn more about Carlson's studies on cognitive maps and navigation in buildings.

Summary

Operant conditioning is based on the work of B. F. Skinner. Operant conditioning is a form of learning in which the motivation for a behavior happens after the behavior is demonstrated. An animal or a human receives a consequence after performing a specific behavior. The consequence is either a reinforcer or a punisher. All reinforcement (positive or negative) increases the likelihood of a behavioral response. All punishment (positive or negative) decreases the likelihood of a behavioral response. Several types of reinforcement schedules are used to reward behavior, depending on either a set or variable period of time (interval schedules) or a set or variable number of responses (ratio schedules).
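To make the reinforcement schedules concrete, here is a minimal Python sketch; it is an illustration added to this section, not a procedure from Skinner's work. It follows the glossary definitions: ratio schedules count responses, interval schedules track elapsed time, and "fixed" versus "variable" decides whether the requirement stays constant or is redrawn unpredictably after each reward. The function names, the convention of calling a schedule once per response with the current time in seconds, and the choice to draw variable requirements uniformly around a mean are all assumptions made for illustration.

```python
import random
from typing import Callable, List

# Hypothetical model: a schedule is called once per response with the time
# (in seconds) of that response and returns True if the response is reinforced.
Schedule = Callable[[float], bool]


def fixed_ratio(n: int) -> Schedule:
    """Fixed ratio (FR-n): every n-th response is reinforced."""
    count = 0

    def respond(t: float) -> bool:
        nonlocal count
        count += 1
        if count == n:
            count = 0  # start counting toward the next reward
            return True
        return False

    return respond


def variable_ratio(mean: int, rng: random.Random) -> Schedule:
    """Variable ratio (VR): the number of responses required varies around `mean`."""
    count = 0
    target = rng.randint(1, 2 * mean - 1)  # assumption: uniform requirement, average = mean

    def respond(t: float) -> bool:
        nonlocal count, target
        count += 1
        if count >= target:
            count = 0
            target = rng.randint(1, 2 * mean - 1)  # new, unpredictable requirement
            return True
        return False

    return respond


def fixed_interval(seconds: float) -> Schedule:
    """Fixed interval (FI): the first response after a set amount of time is reinforced."""
    last_reward = 0.0

    def respond(t: float) -> bool:
        nonlocal last_reward
        if t - last_reward >= seconds:
            last_reward = t
            return True
        return False  # responses before the interval ends earn nothing

    return respond


def variable_interval(mean: float, rng: random.Random) -> Schedule:
    """Variable interval (VI): the required wait varies unpredictably around `mean`."""
    last_reward = 0.0
    wait = rng.uniform(0, 2 * mean)  # assumption: uniform wait, average = mean

    def respond(t: float) -> bool:
        nonlocal last_reward, wait
        if t - last_reward >= wait:
            last_reward = t
            wait = rng.uniform(0, 2 * mean)
            return True
        return False

    return respond


def count_rewards(schedule: Schedule, response_times: List[float]) -> int:
    """Count how many of the responses at `response_times` are reinforced."""
    return sum(schedule(t) for t in response_times)


if __name__ == "__main__":
    times = [float(s) for s in range(1, 61)]  # one response per second for a minute
    rng = random.Random(0)
    print("FR-5:", count_rewards(fixed_ratio(5), times))
    print("VR-5:", count_rewards(variable_ratio(5, rng), times))
    print("FI-10s:", count_rewards(fixed_interval(10.0), times))
    print("VI-10s:", count_rewards(variable_interval(10.0, rng), times))
```

For a learner who responds once per second for a minute, `fixed_ratio(5)` reinforces 12 of the 60 responses and `fixed_interval(10.0)` reinforces 6; continuous reinforcement is simply `fixed_ratio(1)`. Over many responses the variable versions pay off at roughly the same average rate, but the learner cannot predict which response will be reinforced.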


Critical Thinking Questions

1. What is a Skinner box and what is its purpose?

2. What is the difference between negative reinforcement and punishment?

3. What is shaping and how would you use shaping to teach a dog to roll over?

Personal Application Questions

4. Explain the difference between negative reinforcement and punishment, and provide several examples of each based on your own experiences.

5. Think of a behavior that you have that you would like to change. How could you use behavior modification, specifically positive reinforcement, to change your behavior? What is your positive reinforcer?

Answers

1. A Skinner box is an operant conditioning chamber used to train animals such as rats and pigeons to perform certain behaviors, like pressing a lever. When the animals perform the desired behavior, they receive a reward: food or water.

2. In negative reinforcement you are taking away an undesirable stimulus in order to increase the frequency of a certain behavior (e.g., buckling your seat belt stops the annoying beeping sound in your car and increases the likelihood that you will wear your seat belt). Punishment is designed to reduce a behavior (e.g., you scold your child for running into the street in order to decrease the unsafe behavior).

3. Shaping is an operant conditioning method in which you reward closer and closer approximations of the desired behavior. If you want to teach your dog to roll over, you might reward him first when he sits, then when he lies down, and then when he lies down and rolls onto his back. Finally, you would reward him only when he completes the entire sequence: lying down, rolling onto his back, and then continuing to roll over to his other side.

Glossary

cognitive map mental picture of the layout of the environment

continuous reinforcement rewarding a behavior every time it occurs

fixed interval reinforcement schedule behavior is rewarded after a set amount of time

fixed ratio reinforcement schedule set number of responses must occur before a behavior is rewarded

latent learning learning that occurs, but it may not be evident until there is a reason to demonstrate it

law of effect behavior that is followed by consequences satisfying to the organism will be repeated, and behaviors that are followed by unpleasant consequences will be discouraged

negative punishment taking away a pleasant stimulus to decrease or stop a behavior

negative reinforcement taking away an undesirable stimulus to increase a behavior

operant conditioning form of learning in which the stimulus/experience happens after the behavior is demonstrated

partial reinforcement rewarding behavior only some of the time

positive punishment adding an undesirable stimulus to stop or decrease a behavior

positive reinforcement adding a desirable stimulus to increase a behavior

primary reinforcer has innate reinforcing qualities (e.g., food, water, shelter, sex)

punishment implementation of a consequence in order to decrease a behavior

reinforcement implementation of a consequence in order to increase a behavior

secondary reinforcer has no inherent value in itself and only has reinforcing qualities when linked with something else (e.g., money, gold stars, poker chips)

shaping rewarding successive approximations toward a target behavior

variable interval reinforcement schedule behavior is rewarded after unpredictable amounts of time have passed

variable ratio reinforcement schedule the number of responses required before a behavior is rewarded varies

Source: https://courses.lumenlearning.com/wsu-sandbox/chapter/operant-conditioning/
